AI Tools Project (2020-24)

AI Tools - Junior Research Group Project

This project was funded by the Bavarian State Ministry of Science and the Arts, coordinated by the Bavarian Research Institute for Digital Transformation (bidt). Here's a short video teaser (in German, video created for the bidt conference 2021).

About

Many “intelligent” systems today lack interaction: They run “AI as a service” in the background to serve ready-made meals, often presented as suggestions or recommendations. As users, we can accept these or not, but our role thus seems limited to that of passive consumers. Even experts often just receive the final output of black-box AI systems: If it is uninformative, they change the code and start over. Among humans, we would judge such a lack of exchange during a task as ineffective, and we should not accept it for AI either.

How can we enable fluent human-AI interaction?

We address this with the perspective of “AI as a tool”: We envision that humans apply and steer AI with continuous impact and feedback, and combine AI modules from a toolbox. We see continuous interaction, flexible control, appropriation and explainability, as well as ergonomic considerations among the key factors. We aim to develop conceptual and technical foundations and to explore application domains in which such tools can empower millions of people (e.g. data analysis, mobile communication, design, health).

AI Tools

What is an AI tool? These key elements guide our work on such tools:

Interactive: An AI tool is interactively used by humans, without replacing them.

Shaping interaction: An AI tool influences how we actively engage with the overall system and the task at hand (i.e. it is not exclusively a “background service”).

Beyond anthropomorphism: Not all interactive AI needs to be presented to users as a “person” or “being”. Contrasting AI tools with this trend lets us investigate when such a presentation might be beneficial, including concepts in between tools and agents.

Empowering: An AI tool is useful if it helps humans in a task, i.e. if it improves a meaningful metric, such as efficiency, effectiveness, or expressiveness.

Consider this analogy with classic tools: With a hand drill, people provide both a control signal (positioning the drill at the target) and power (turning the handle). In contrast, the power drill introduced an external power source (electricity) while keeping humans in control (the user still positions the drill). By analogy, we envision future digital AI tools that empower humans with AI as a “power source” for task-relevant intelligence. Note that we use “tool” as a metaphor for interaction, not to imply constraints on the underlying AI capabilities. With this view, we also explore interaction concepts beyond the traditional tools vs. agents dichotomy in HCI.

Publications

Here we list selected recent publications that are part of this project. Our group's full publication list is available on our publications page.

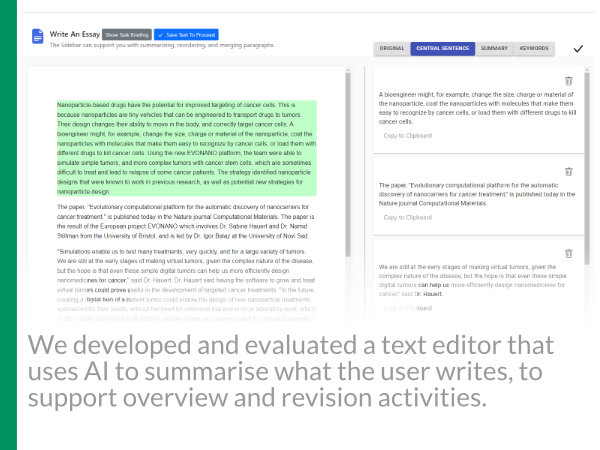

Beyond Text Generation: Supporting Writers with Continuous Automatic Text Summaries (UIST'22)

▹Paper (ACM DL) · Preprint (arXiv)

We explore a new interaction concept and AI role for writing with AI support: The AI neither replaces the writer nor "checks" or "corrects" them. Instead, it generates parallel content that supports users in evaluating their own writing. Concretely, we developed a text editor that uses automatic text summarization to provide continuously updated paragraph-wise summaries as margin annotations. In a user study, these summaries gave writers an external perspective on their writing and helped them revise their text. More broadly, this work highlights the value of designing AI tools for writers with Natural Language Processing (NLP) capabilities that go beyond direct text generation and correction.

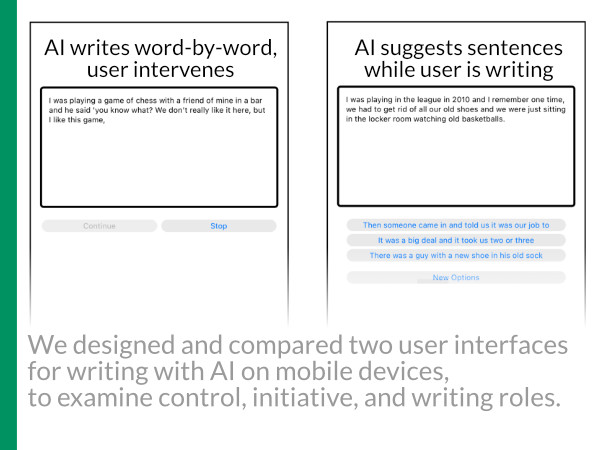

Suggestion Lists vs. Continuous Generation: Interaction Design for Writing with Generative Models on Mobile Devices Affect Text Length, Wording and Perceived Authorship (MuC'22)

▹Paper (ACM DL) · Preprint (arXiv)

Neural language models have the potential to support human writing. We designed and compared two user interfaces for writing with AI on mobile devices, which vary the levels of initiative and control: 1) With continuous generation, the AI adds text word by word and the user steers it. 2) With suggestions, the AI suggests phrases and the user selects them from a list. In a supervised online study (N=18), participants used these prototypes and a baseline without AI. Our findings add new empirical evidence on the impact of UI design decisions on user experience and output with co-creative systems.

Awarded a "Best Paper Honourable Mention Award" at MuC'22.

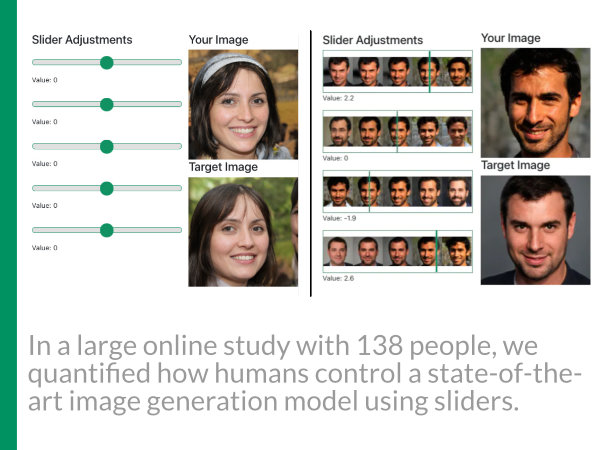

GANSlider: How Users Control Generative Models for Images using Multiple Sliders with and without Feedforward Information (CHI'22)

▹Paper (ACM DL) · Preprint (arXiv) · Video preview (YouTube)

Sliders are the most commonly used user interface element for interacting with generative models for images. For example, AI researchers use such sliders to explore trained models. Moreover, sliders are used in products (e.g. Adobe Photoshop) to allow non-expert users to apply such models. In this project, we quantified key aspects of this interaction, proposed an improved slider design, and extracted underlying control strategies. Our insights are relevant for researchers working on models and related interfaces, and for industry practitioners who want to empower their users with state-of-the-art generative AI.

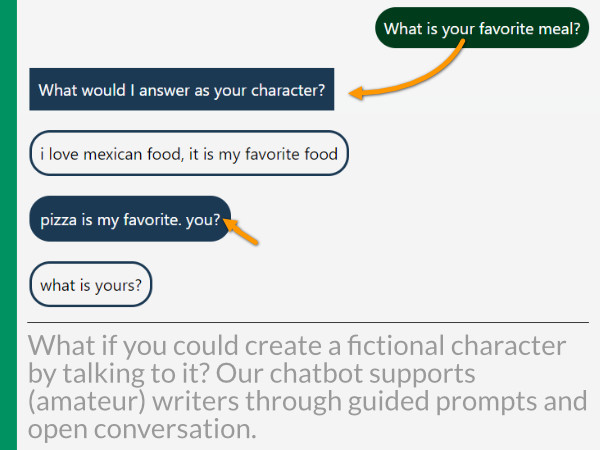

CharacterChat: Supporting the Creation of Fictional Characters through Conversation and Progressive Manifestation with a Chatbot (C&C'21)

▹Paper (ACM DL) · Preprint (arXiv) · Conference presentation (YouTube) · Chatbot in action (YouTube)

What if you could create a fictional character by talking to it? This paper investigates the potential of using a conversational user interface (a chatbot) to support writers in creating new fictional characters. The key idea is that writers turn the chatbot into their imagined character by having a conversation with it. The paper reports on the development of this concept, an implemented prototype, and insights from two user studies with writers.

The Impact of Multiple Parallel Phrase Suggestions on Email Input and Composition Behaviour of Native and Non-Native English Writers (CHI'21)

▹Paper (ACM DL) · Preprint (arXiv) · Presentation (YouTube) · Medium article

This paper presents an in-depth analysis of the impact of phrase suggestions from a neural language model on user behaviour in email writing. Our study with 156 people is the first to compare different numbers of parallel text suggestions and their use by native and non-native English writers. Our results reveal (1) benefits for ideation, but costs for efficiency, when suggesting multiple phrases; (2) that non-native speakers benefit more from more suggestions; and (3) further insights into behaviour patterns. We discuss implications for research and design, and the vision of supporting writers with AI instead of replacing them.

Awarded a "Best Paper Honourable Mention Award" at CHI'21.

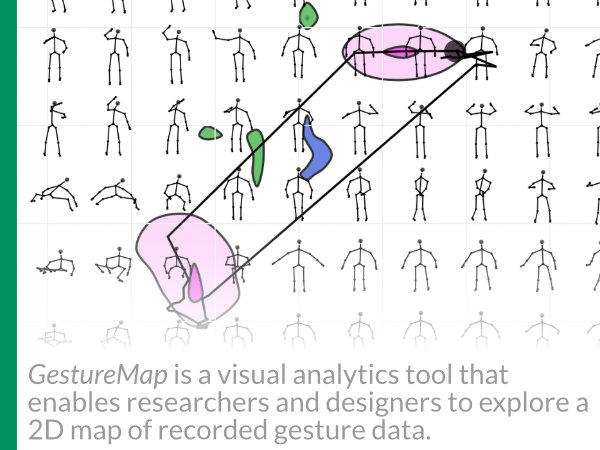

GestureMap: Supporting Visual Analytics and Quantitative Analysis of Motion Elicitation Data by Learning 2D Embeddings (CHI'21)

▹Paper (ACM DL) · Preprint (arXiv) · Presentation (YouTube)

GestureMap is a visual analytics tool that directly visualises a space of gestures as an interactive 2D map, using a Variational Autoencoder. We evaluated GestureMap and its concepts with eight experts and an in-depth analysis of published datasets. Our findings show how GestureMap facilitates exploring large datasets and helps researchers to gain a visual understanding of gesture spaces. It further opens new directions, such as comparing users' gesture proposals across many user studies. We discuss implications for gesture elicitation studies and research.

Eliciting and Analysing Users' Envisioned Dialogues with Perfect Voice Assistants (CHI'21)

▹Paper (ACM DL) · Preprint (arXiv)

We present a dialogue elicitation study to assess how users envision conversations with a perfect voice assistant (VA). In an online survey, 205 participants were prompted with everyday scenarios and wrote the lines of both user and VA in dialogues that they imagined as perfect. We analysed the dialogues with text analytics and qualitative analysis. The majority envisioned dialogues with a VA that is interactive and not purely functional; it is smart, proactive, and has knowledge about the user. Attitudes diverged regarding the assistant's role and whether it should express humour and opinions.

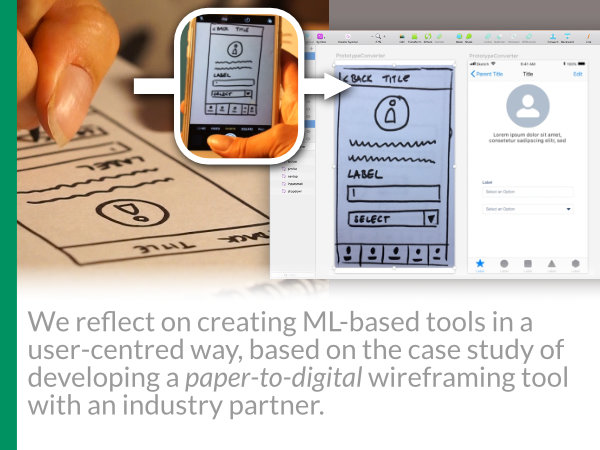

Paper2Wire - A Case Study of User-Centred Development of Machine Learning Tools for UX Designers (Proc. Mensch und Computer 2020)

▹Paper (ACM DL) · Presentation (YouTube) · Medium article

This paper reflects on a case study of a user-centred concept development process for a Machine Learning (ML) based design tool, conducted at an industry partner. The resulting concept uses ML to match graphical user interface elements in sketches on paper to their digital counterparts to create consistent wireframes. A user study (N=20) with a working prototype shows that designers prefer this concept to the previous manual procedure. Reflecting on our process and findings, we discuss lessons learned for developing ML tools that respect practitioners' needs and practices.

Awarded a "Best Paper Honourable Mention Award" at MuC'20.

LanguageLogger: A Mobile Keyboard Application for Studying Language Use in Everyday Text Communication in the Wild (Proc. ACM Hum.-Comput. Interact., June 2020)

▹Paper (ACM DL) · Project website

We present a new keyboard app as a research tool to collect data on language use in everyday mobile text communication (e.g. chats). Our approach enables researchers to collect such data during unconstrained natural typing without logging readable messages, thereby preserving privacy. We achieve this with a combination of three customisable text abstraction methods that run directly on participants' phones. Our research tool thus facilitates diverse interdisciplinary projects interested in language data, e.g. in HCI, AI, Linguistics, and Psychology.

Get in touch with us if you would like to deploy the tool in your research project!

▹Paper (ACM DL) · Preprint (arXiv) · Presentation (YouTube)

The "AI Tools" project contrasts presenting AI as a tool with the currently common presentation as an agent. Such agents may appear to have a certain personality. In this interdisciplinary work across HCI, AI, and Psychology, we ask how such AI personality might be designed in an informed way: We present the first systematic analysis of personality dimensions to describe speech-based conversational AI agents, following the psycholexical approach from Psychology. A factor analysis reveals that the commonly used Big Five model for human personality does not adequately describe AI personality. We propose alternative dimensions as a step towards developing an AI agent personality model.

Awarded a "Best Paper Honourable Mention Award" at CHI'20.

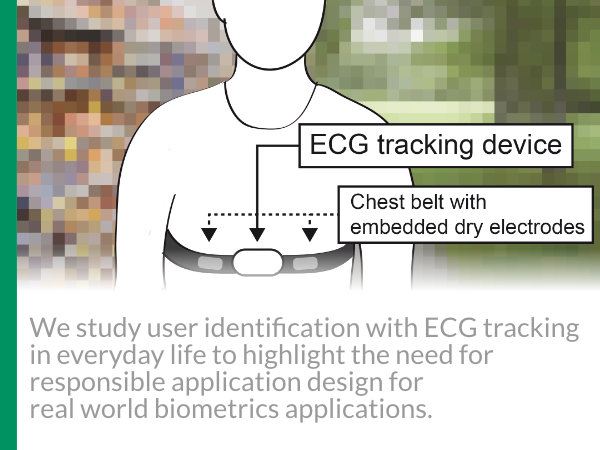

Heartbeats in the Wild: A Field Study Exploring ECG Biometrics in Everyday Life (CHI'20)

▹Paper (ACM DL) · Preprint (arXiv) · Presentation (YouTube)

Interactive AI systems process user data, and increasingly this includes physiological data. This paper reports on the first in-depth study of one such source, electrocardiogram (ECG) data, in everyday life. Our study contributes to the bigger picture that new biometric modalities can now viably be measured in daily life. A broader implication is that it becomes increasingly important not to adopt a simplified view of “biometrics” as a single concept. Working with everyday data, as in this study, thus highlights the need for responsible design of real-world biometric applications.

Awarded a "Best Paper Honourable Mention Award" at CHI'20.

What is "Intelligent" in Intelligent User Interfaces? A Meta-Analysis of 25 Years of IUI (IUI'20)

▹Paper (ACM DL) · Preprint (arXiv) · Medium article · bidt blog article

AI is a hyped term, but what does it actually mean for technology to be "intelligent"? We followed a bottom-up approach to analyse the emergent meaning of intelligence in the research community of Intelligent User Interfaces (IUI). We processed all IUI papers from 25 years to extract how researchers use the term "intelligent". Our results show a growing variety over the years in what is referred to as intelligent and how it is characterised. At the core, we found the key aspects of automation, adaptation, and interaction.

Workshops

Interested in discussing related ideas? We have been present at the following workshops and events:

- 04.09.2022: Workshop on User-Centered Artificial Intelligence (Workshop at Mensch und Computer '22)

We are co-organising this workshop.

Workshop website

- 10.05.2022: GenAIHCI: Generative AI and Computer Human Interaction (Workshop at CHI'22)

Workshop website

Our paper: How to Prompt? Opportunities and Challenges of Zero- and Few-Shot Learning for Human-AI Interaction in Creative Applications of Generative Models

- 21.03.2022: HAI-GEN 3rd Workshop on Human-AI Co-Creation with Generative Models (Workshop at IUI'22)

We are part of the program committee for this workshop.

Workshop website

- 05.09.2021: Workshop on User-Centered Artificial Intelligence (Workshop at Mensch und Computer '21)

We are co-organising this workshop.

Workshop website

- 08. / 09.05.2021: Operationalizing Human-Centered Perspectives in Explainable AI (Workshop at CHI'21)

Workshop website

Our paper: Interactive End-User Machine Learning to Boost Explainability and Transparency of Digital Footprint Data

- 20.04.2021: Bridging Human-Computer Interaction and Natural Language Processing (Workshop at EACL'21)

Workshop website

Our position paper: Methods for the Design and Evaluation of HCI+NLP Systems

- 13.04.2021: HAI-GEN 2nd Workshop on Human-AI Co-Creation with Generative Models (Workshop at IUI'21)

Workshop website

Our position paper: Nine Potential Pitfalls when Designing Human-AI Co-Creative Systems

- 13.04.2021: CUI@IUI: Theoretical and Methodological Challenges in Intelligent Conversational User Interface Interactions (Workshop at IUI'21)

Workshop website

Our position paper: Chatbots for Experience Sampling - Initial Opportunities and Challenges

- 06.09.2020: Workshop on User-Centered Artificial Intelligence (Workshop at Mensch und Computer '20)

We are co-organising this workshop.

Workshop website

Our position paper: Autocompletion as a Basic Interaction Concept for User-Centered AI

- 25.04.2020: Artificial Intelligence for HCI: A Modern Approach (Workshop at CHI'20)

Workshop website

Our position paper: Timing AI in HCI: Computational Approaches to Temporal Strategies for Mixed-Initiative Intelligent Systems

- 17.03.2020: AI Methods for Adaptive User Interfaces (Workshop at IUI'20)

Workshop website

Our position paper: Probabilistic GUI Representations for Adaptive User Interfaces

Collaborations

For various projects, we are working with great people from other labs, including colleagues at:

- LMU Munich, Germany (groups: HCI, Ubiquitous Media, Psychology, Biophysics)

- Bundeswehr University Munich, Germany (Usable Security and Privacy)

- University of Glasgow, UK

- Aalto University, Finland (groups: user interfaces, graphics, games / ML)