Project: Raised

This is a project of the CIIS course (WS 2019/20). The content below was created by Joshua Wohlleben.

Raised - An Uplifting Technology

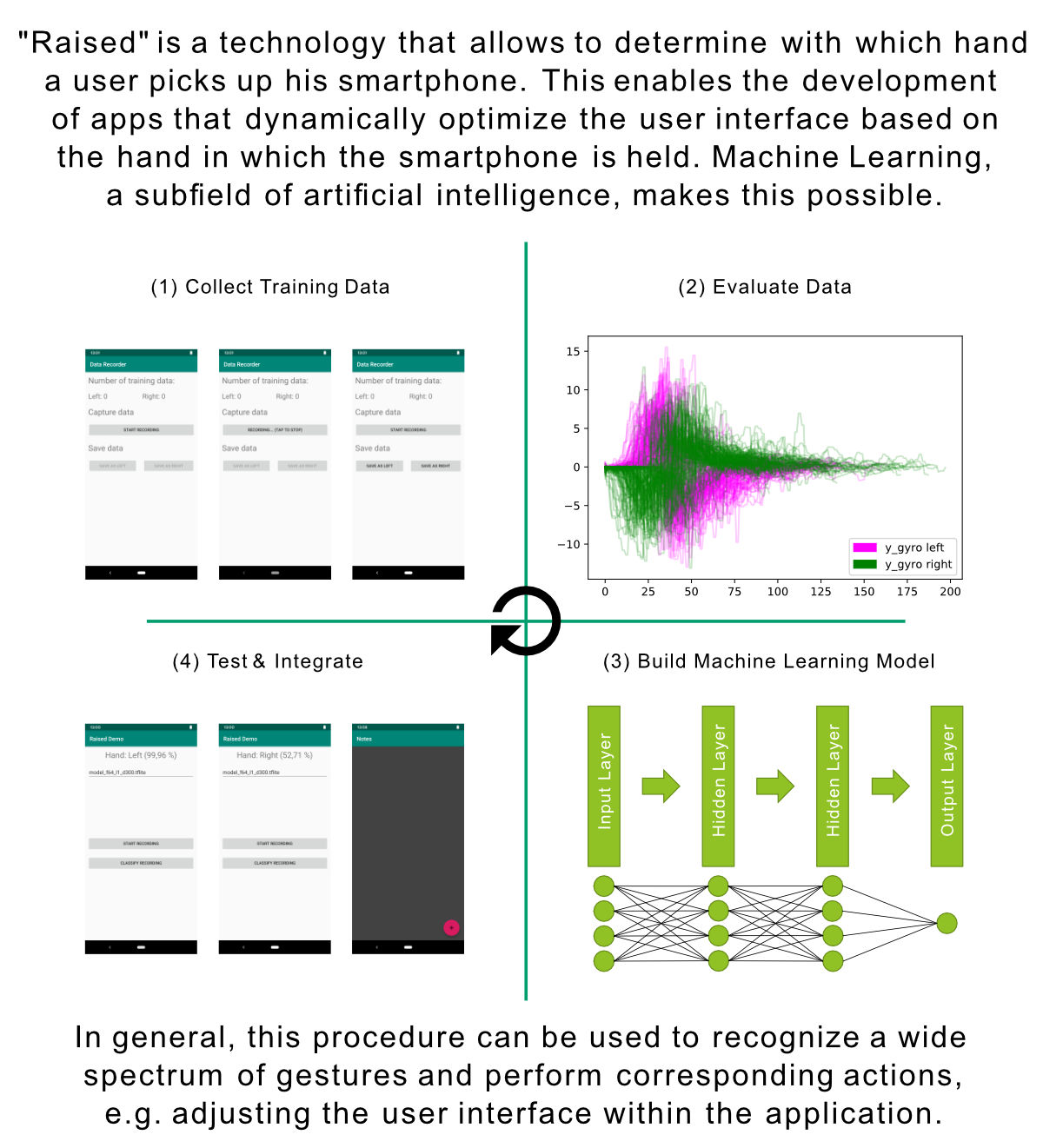

Many people know this problem: apps on smartphones and tablets are often optimized for right-handed use, or they even require two hands to operate. This makes it difficult to use the phone with one hand, especially the left one. This is where Raised comes in: Raised uses machine learning, a subfield of artificial intelligence, to automatically detect which hand a user lifts their smartphone with, and then adjusts the user interface so that important elements are easy to reach with that hand.

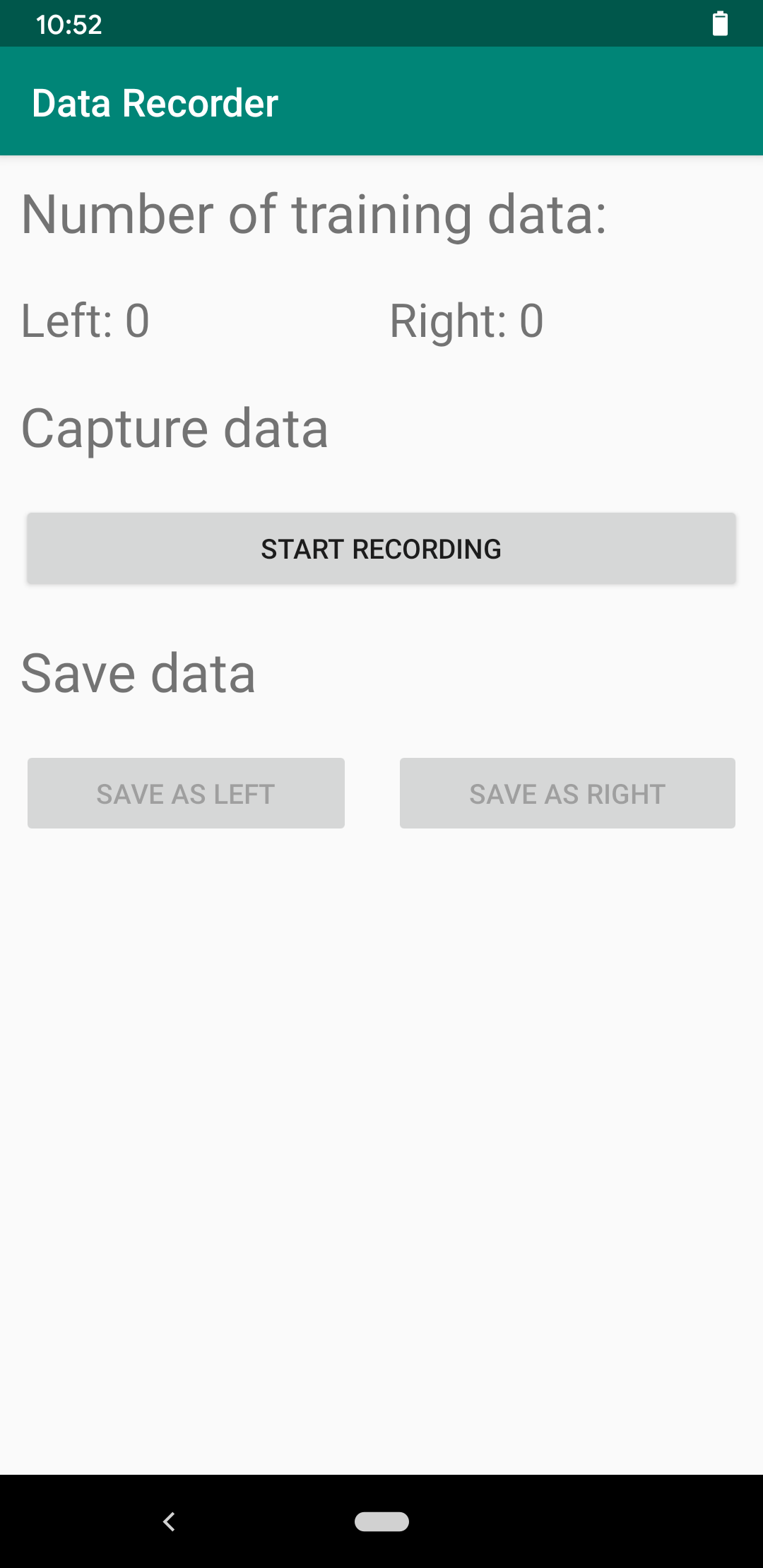

At the core of this technology is a machine learning model that predicts, from the phone's motion, which hand is lifting it. Essentially, it is a neural network that is "fed" with data. During training, the network adjusts the weights of the connections between its neurons. In this way the model "learns" to recognize patterns in the data, which enables it to classify new, unseen data of a similar form. For this to work, training data must first be collected. The DataRecorder app does this by recording accelerometer and gyroscope readings during a lifting movement. The data is saved as CSV files for easy evaluation later, together with the information about which hand performed the lift.
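The DataRecorder's exact file layout is not described here, so the following is only a minimal sketch of how one recording could be loaded for evaluation. It assumes a hypothetical schema with one row per sensor sample: a `timestamp`, three accelerometer axes (`acc_x`, `acc_y`, `acc_z`), three gyroscope axes (`gyro_x`, `gyro_y`, `gyro_z`) and a `hand` column holding `left` or `right`. The names `Recording` and `load_recording` are likewise illustrative.

```python
import csv
import io
from dataclasses import dataclass

# Hypothetical column layout; the real DataRecorder CSV schema may differ.
SENSOR_COLUMNS = ["acc_x", "acc_y", "acc_z", "gyro_x", "gyro_y", "gyro_z"]


@dataclass
class Recording:
    samples: list[list[float]]  # one row of six sensor values per time step
    hand: str  # label of the lifting hand, e.g. "left" or "right"


def load_recording(csv_text: str) -> Recording:
    """Parse one lifting movement (hypothetical schema) into samples plus its hand label."""
    reader = csv.DictReader(io.StringIO(csv_text))
    samples = []
    hands = set()
    for row in reader:
        samples.append([float(row[column]) for column in SENSOR_COLUMNS])
        hands.add(row["hand"])
    # A single lifting movement should be performed by exactly one hand.
    if len(hands) != 1:
        raise ValueError(f"expected exactly one hand label per recording, got {hands}")
    return Recording(samples=samples, hand=hands.pop())


example = """timestamp,acc_x,acc_y,acc_z,gyro_x,gyro_y,gyro_z,hand
0,0.1,9.7,0.3,0.01,0.02,0.00,left
20,0.4,9.5,1.2,0.35,-0.10,0.05,left
"""
rec = load_recording(example)
print(len(rec.samples), rec.hand)  # 2 left
```

Keeping the label next to the samples means every recording can be turned directly into a labeled training example.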

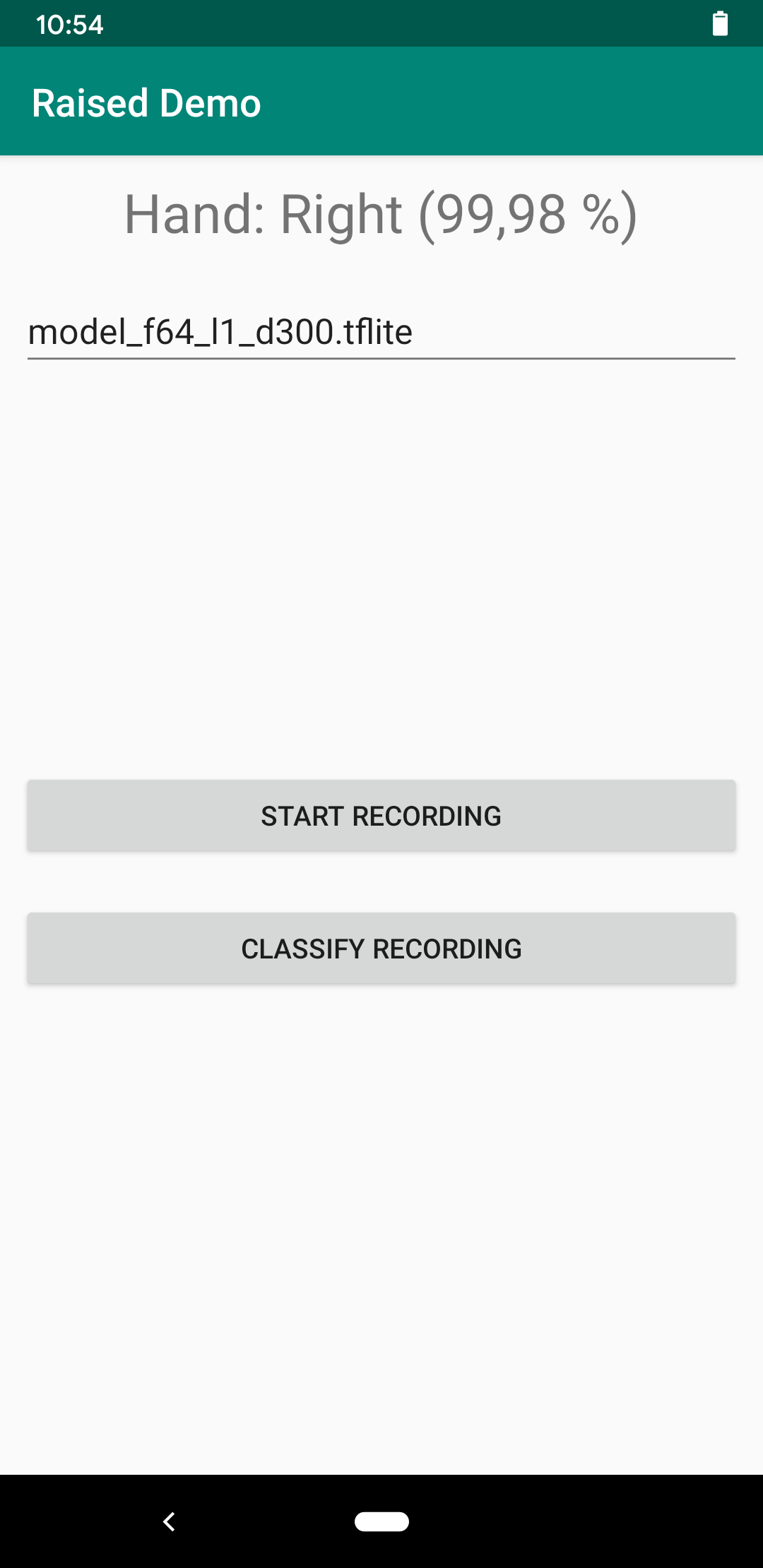

Once the data has been evaluated, a machine learning model can be designed and trained on it. TensorFlow Keras and TensorFlow Lite were used for this purpose. The model's input is the sensor data recorded while the user lifts their smartphone. The trained model then passes its prediction of the hand being used to the application, which can react accordingly, e.g. by adjusting the user interface.
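A network with a fixed input shape needs every lifting movement to have the same size, while the model's output has to be translated into a decision the app can act on. The sketch below shows one plausible way to handle both ends, under stated assumptions: a hypothetical window of 50 time steps with six sensor channels, zero-padding or truncation to reach that length, and two output probabilities ordered as (left, right). The confidence threshold of 0.7 is also an illustrative choice. The TensorFlow Lite model itself would run between these two steps and is not shown.

```python
WINDOW_LENGTH = 50  # hypothetical number of time steps the model expects
NUM_CHANNELS = 6  # 3 accelerometer axes + 3 gyroscope axes
HANDS = ("left", "right")  # hypothetical order of the model's output classes


def to_model_input(samples, window_length=WINDOW_LENGTH):
    """Truncate or zero-pad a recording to a fixed length, then flatten it."""
    fixed = [list(step) for step in samples[:window_length]]
    while len(fixed) < window_length:
        fixed.append([0.0] * NUM_CHANNELS)
    return [value for step in fixed for value in step]


def decode_prediction(probabilities, min_confidence=0.7):
    """Map class probabilities to a hand, or return None if the model is unsure."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    if probabilities[best] < min_confidence:
        return None  # keep the current layout instead of guessing
    return HANDS[best]


features = to_model_input([[0.1, 9.7, 0.3, 0.01, 0.02, 0.0]] * 30)
print(len(features))  # 300
print(decode_prediction([0.12, 0.88]))  # right
print(decode_prediction([0.55, 0.45]))  # None
```

Returning `None` for uncertain predictions lets the application leave the interface unchanged rather than moving elements to the wrong side.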

After being tested on the smartphone with the RaisedDemo app, the model can be integrated into an application. Here, Raised was integrated into a notes app that supports basic features such as adding, editing and removing notes. Once the app has started, it can be switched to listening mode, in which it waits for the user to raise the smartphone. As soon as the phone is lifted, the app automatically adapts the user interface to the user's hand; in this case, it moves the button for adding notes.
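How the notes app detects the start of the movement is not described, so the following is a hypothetical sketch of listening mode as a small controller. It assumes the accelerometer reports values that include gravity (about 9.81 m/s² at rest), treats a deviation of more than 1.5 m/s² from that value as the start of a lift, records a fixed number of samples, and then asks a `classify` callback (standing in for the trained model) which hand was used. The class name, threshold and default button side are all illustrative.

```python
import math
from typing import Callable, Optional

GRAVITY = 9.81  # m/s^2, magnitude measured by an accelerometer at rest
MOTION_THRESHOLD = 1.5  # hypothetical deviation from gravity that counts as lifting


class ListeningController:
    """Hypothetical listening mode: wait for a lift, classify it, move the add button."""

    def __init__(self, classify: Callable[[list], Optional[str]], window_length: int = 50):
        self.classify = classify
        self.window_length = window_length
        self.listening = False
        self.recording: list = []
        self.button_side = "right"  # default layout before any lift is detected

    def start_listening(self) -> None:
        self.listening = True
        self.recording = []

    def on_sensor_sample(self, sample) -> None:
        """Feed one [acc_x, acc_y, acc_z, gyro_x, gyro_y, gyro_z] sample."""
        if not self.listening:
            return
        acc_magnitude = math.sqrt(sum(a * a for a in sample[:3]))
        # Ignore samples until the phone moves noticeably away from rest.
        if not self.recording and abs(acc_magnitude - GRAVITY) < MOTION_THRESHOLD:
            return
        self.recording.append(sample)
        if len(self.recording) >= self.window_length:
            hand = self.classify(self.recording)
            if hand is not None:
                self.button_side = hand  # e.g. place the add button on this side
            self.listening = False  # one lift per activation of listening mode


controller = ListeningController(classify=lambda window: "left", window_length=3)
controller.start_listening()
controller.on_sensor_sample([0.0, 9.8, 0.0, 0.0, 0.0, 0.0])  # at rest: ignored
for _ in range(3):
    controller.on_sensor_sample([2.0, 12.0, 3.0, 0.5, 0.1, 0.0])  # lifting
print(controller.button_side, controller.listening)  # left False
```

A plain magnitude threshold is the simplest possible trigger; it only decides when to start recording, while the decision about the hand is left entirely to the model.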

Raised is still at an early stage. With further testing and a larger training dataset, the technology could be developed further so that it automatically detects when the user lifts the phone and also reacts when the user switches hands mid-air. More generally, a wide range of gestures could be detected with this approach.

See Raised in action here: